Why Web Developers Have to Know Technical SEO

SEO has evolved to the point where every team member needs to know how to implement it effectively. Gone are the days when you could just hand it off to the “SEO team” and let them handle it.

From initial conception and development through production and launch, a full SEO strategy should be understood and implemented by everyone involved in building the website, including marketers, product managers, and QA teams.

Web developers play a particularly special role in SEO implementation. They’re in a unique position — right there working with the codebase — to influence not only the technical side of optimization but also on-page SEO.

Let’s take a look at where and how web developers can insert themselves into the SEO ecosystem.

What Part of SEO Are Web Developers Involved In?

Before things get too complicated, let’s first talk about what SEO actually is. SEO stands for Search Engine Optimization, and literally refers to the process of optimizing all the elements of your website to make it easy for users to find your site on major search engines.

The concept of SEO covers a wide range of components that relate to how a website is built. In general, these components can be grouped into a few main categories or “types” of SEO, including:

- On-page

- Off-page

On-page SEO

On-page SEO involves all of the content on your site and how it’s organized. Great content organized for human readability gives search engine crawlers a reason to rank you higher on the SERP (Search Engine Results Page).

Meta information, titles, keyword density, and headings are just some of the pieces of a complete, effective, and successful SEO strategy. SEO experts analyze these elements and come up with an action plan that web developers can then implement.

If an SEO team’s audit finds problems with a site’s on-page SEO — incorrect, inaccurate, or missing page elements — your site will miss crucial opportunities to rank high.

Off-page SEO

Off-page SEO has to do largely with building backlinks. Search engines use backlinks to establish a site’s authority: inbound links leading to your site show a search engine that your content is worth mentioning. Development teams are actively involved in on-page SEO, but they usually play little part in off-page SEO.

How Should Developers Consider SEO During the Web Development Pipeline?

In order to give users the best possible search results, search engines scan and catalogue almost all of the content on the internet in a three-step process:

Crawling phase

Starting from the first page it finds on the site, the crawler scans the document at a surface level and then moves on to the links on that page. All of this crawling comes at a cost, however. Google Search uses a ‘crawl budget’ to determine how many URLs Googlebot will request from your servers and how often. If your site responds slowly, it may not get crawled thoroughly. That said, most developers don’t need to worry about crawl budgets unless their site has more than a few thousand URLs or auto-generates pages based on URL parameters.

Rendering phase

In the rendering phase, search engines use a WRS (Web Rendering Service) to execute the page’s JavaScript and render its embedded content. This allows them to assess content that only appears after rendering, along with your site’s videos, images, and other embedded media.

Indexing phase

Lastly, during the indexing phase, search engines create a modified copy of your website and catalogue it into their databases. Doing so allows search engines to optimize search times and deliver search results quickly.

What Role do Web Developers Play?

Web Developers who are familiar with each part of the pipeline can make critical decisions about the technology stack their clients need. For example, pages built with client-side libraries like ReactJS can be difficult for search engines to crawl, render, and index, because their content often appears only after JavaScript runs. Pages that can’t be crawled don’t show up in SERPs.

Why do Web Developers Need to Know About Crawling and Rendering Specifically?

Web Developers can help search engines index their websites more efficiently by using three technical SEO components used in the crawling phase. These are:

- Sitemaps

Sitemaps are important files that contain information that helps web crawlers understand which pages, videos, and other files to crawl and index on your site, as well as how to prioritize them. There are two types of sitemaps, and as you’ll see, both are necessary for the best technical SEO practices.

- An HTML sitemap is simply an HTML page listing all of your pages with appropriate links to each of them. It’s human-readable and useful for both people and crawlers.

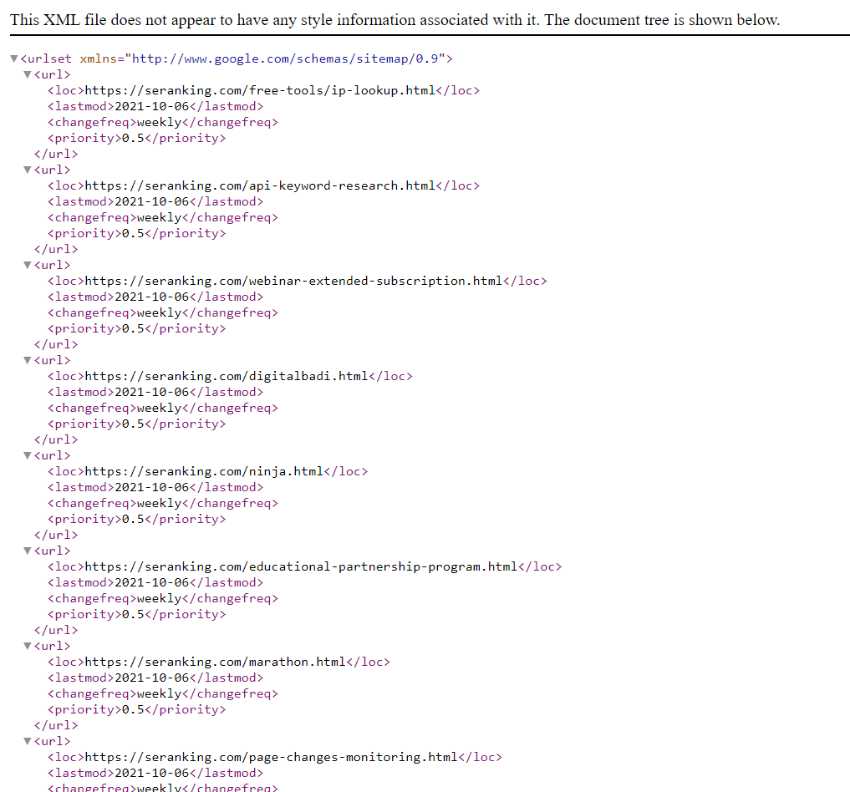

- An XML sitemap is needed to show your website’s structure to the search bot. It can be automatically generated. Here is an example of seranking.com’s XML sitemap:

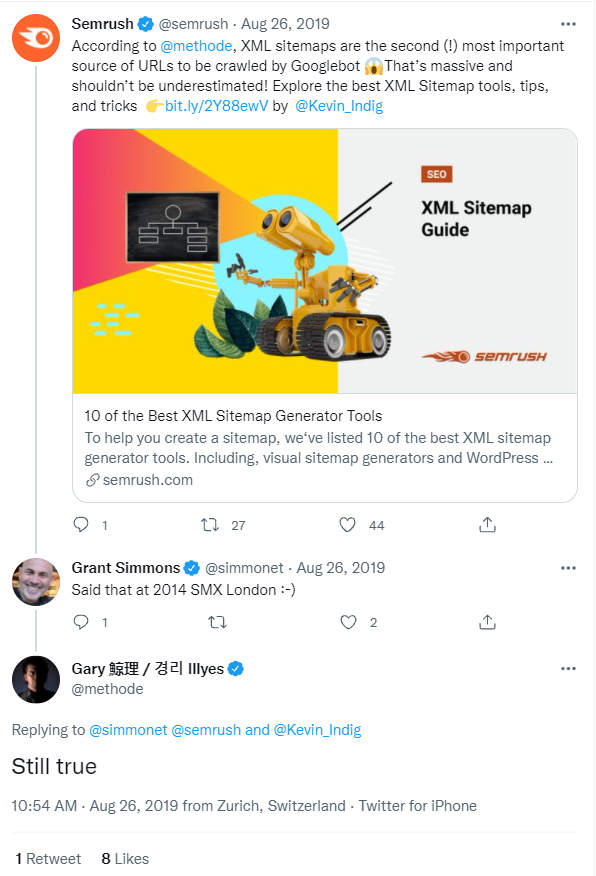

According to Gary Illyes, a webmaster trends analyst at Google, XML sitemaps are the second most important source of URLs crawled by Googlebot.
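As a rough illustration of how an XML sitemap can be generated automatically, here is a minimal Python sketch that uses only the standard library. The `build_sitemap` function and the `example.com` URLs are hypothetical names chosen for this example; a real site would usually pull its URL list from a CMS or router.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML for an iterable of (url, lastmod) pairs.

    lastmod is a 'YYYY-MM-DD' string, or None to leave the tag out.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages, for illustration only.
xml_text = build_sitemap([
    ("https://example.com/", "2024-01-01"),
    ("https://example.com/about", None),
])
print(xml_text)
```

The resulting file is typically served from the site root and referenced from ‘robots.txt’ so crawlers can discover it.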

- Robots.txt and ‘robots’ meta tag

A ‘robots.txt’ file works by allowing or disallowing crawler access to specific areas of a website. This is a critical piece of SEO that developers should be aware of for two reasons.

- Certain pages of a site might harm ranking — technical pages, pages with too many links, unorganized information, poor readability, etc.

- Sensitive or secure data on parts of a site could lead to data breaches when indexed.

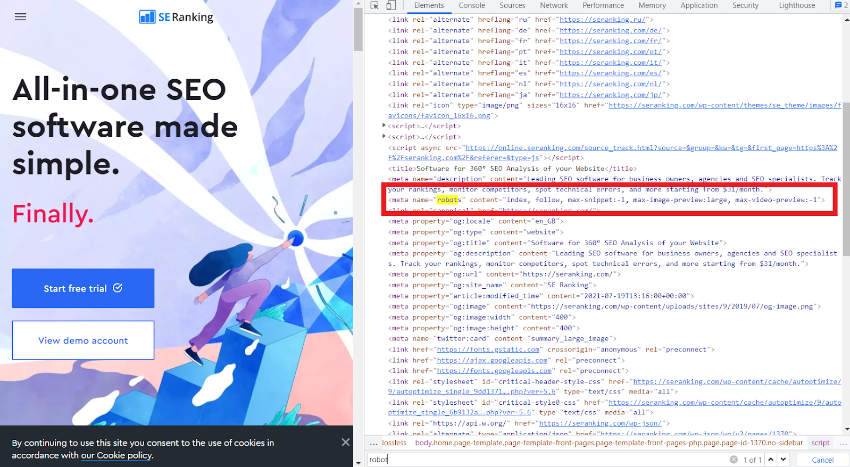

The ‘robots’ meta tag works similarly to the ‘robots.txt’ file, with one key difference: the ‘robots’ meta tag is included in the ‘head’ of an HTML file and tells search engines how to handle indexing and link-following on a page-specific level. These directives are usually added to the website by developers. ‘robots.txt’, on the other hand, lets developers influence how a website is crawled and indexed at a macro level, for example by providing custom rules for different search engines (user-agents) and by declaring the location of sitemaps.

In a ‘robots’ meta tag, the content attribute carries the directive. A value of “follow”, for instance, tells search engines to follow all links on that specific page.
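To make the ‘robots.txt’ rules more concrete, the following sketch feeds a small, made-up ‘robots.txt’ file to Python’s standard `urllib.robotparser` module and asks which URLs a crawler may fetch. The file contents, the `/admin/` path, and the `is_crawlable` helper are assumptions for illustration, not rules taken from any real site.

```python
from urllib.robotparser import RobotFileParser

# A hypothetical robots.txt: block /admin/ for every crawler,
# allow everything else, and point crawlers at the sitemap.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Allow: /

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_crawlable(url, user_agent="*"):
    """Return True if the parsed rules let user_agent fetch url."""
    return parser.can_fetch(user_agent, url)


for url in ("https://example.com/blog/post",
            "https://example.com/admin/settings"):
    print(url, "->", "allowed" if is_crawlable(url) else "blocked")

# site_maps() needs Python 3.8+; it lists the Sitemap: entries.
print(parser.site_maps())
```

A check like this can run in a test suite so that a deployment does not accidentally block pages that should rank. Note that different crawlers may resolve overlapping Allow and Disallow rules differently from this parser.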

- ‘noindex’ meta tag

The ‘noindex’ directive is a parameter in the ‘robots’ meta tag that tells search engines not to index a page, essentially not to show it in the SERP. Be careful, though: like the rules in a ‘robots.txt’ file, ‘noindex’ relies on crawlers choosing to honor it. Major search engines generally do, but only if they can fetch the page, so a page that is also blocked in ‘robots.txt’ may still end up indexed.
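One way to verify which directives a page actually ships with is to parse its HTML and read the ‘robots’ meta tag. The sketch below does this with Python’s built-in `html.parser`; the `RobotsMetaParser` class, the `robots_directives` helper, and the sample page are hypothetical and only meant to show the idea.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() != "robots":
            return
        content = attr.get("content") or ""
        # Directives are comma-separated, e.g. "noindex, follow".
        for directive in content.split(","):
            if directive.strip():
                self.directives.add(directive.strip().lower())


def robots_directives(html):
    """Return the set of robots directives found in an HTML string."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return parser.directives


# Made-up page used only for this example.
PAGE = """<html><head>
<title>Internal search results</title>
<meta name="robots" content="noindex, follow">
</head><body></body></html>"""

found = robots_directives(PAGE)
print("noindex" in found, "follow" in found)
```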

What Can Web Developers, SEO Experts, and Marketers Learn About SEO to Help Search Ranking?

Structured Data

Web Developers, SEO Experts, and marketers don’t have to create every piece of promotional content to increase their SEO ranking. They do, however, need to supply search engines, and Google in particular, with enough information to do it for them.

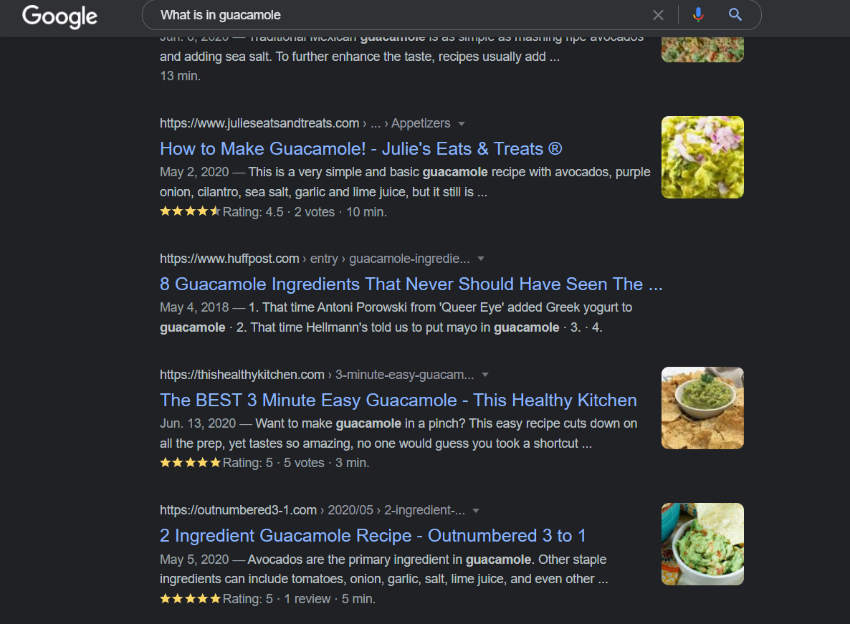

Structured data, which powers what Google calls ‘Rich Results’, is an additional way to deliver the details that your users might need. Rich results are additional elements that appear in the SERP along with your regular blue link, title, and description.

As you can see, implementing structured data helps show images and starred reviews in the SERPs, along with videos, event listings, and much more.

Why Does it Matter for SEO?

The main objective of structured data is to show your website in the SERP correctly, in an engaging way, and to increase CTR.

What Role do Web Developers Play?

Structured data has to come from metadata that is added to your site’s code. This is where Web Developers become involved. Because they already work in the website’s code, Web Developers can readily add the metadata that crawlers need to create rich results.

Why do Web Developers Need to Know About Writing Structured Data Specifically?

Structured data doesn’t need to be a costly or aggravating side project. Developers can consult a comprehensive structured data reference catalogue for schema information, along with guides on how to add structured data to their sites for article, image, video, and product content. Alternatively, they can use a helper supplied by Google to auto-generate finished code.
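For a sense of what that metadata can look like, here is a small Python sketch that builds a JSON-LD block for an article using schema.org’s `Article` type. The `article_json_ld` function, the author name, and the date are placeholders invented for this example, and the properties a given rich result needs should be checked against the search engine’s own documentation.

```python
import json


def article_json_ld(headline, author_name, date_published, image_url=None):
    """Return a <script> tag holding schema.org Article data as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,
    }
    if image_url:
        data["image"] = [image_url]
    # Escape "</" so the JSON cannot close the script tag early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Placeholder values, for illustration only.
snippet = article_json_ld(
    headline="Why Web Developers Have to Know Technical SEO",
    author_name="Jane Doe",
    date_published="2022-01-15",
)
print(snippet)
```

The returned tag would normally be rendered into the page’s ‘head’ by the site’s templates.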

What are some of the Best SEO Practices for Web Developers?

User experience should be at the forefront of design and implementation for everyone involved in website building and management. Search engines are getting better at indexing and ranking based on natural human readability rather than the old way of keyword stuffing. Here are three areas where technical SEO can be optimized:

Loading speed

The time your pages take to load is now a factor in how Google ranks your site. This is especially true for the mobile version of your site. If a given page takes more than 3 seconds to load, most users will have already left before it’s loaded. Poor usability and user navigation experience can lead to higher bounce rates and ultimately a lower ranking in SERPs.

Slow loading speeds can also affect how bots treat your site when they perform crawling. According to Google, “Making a site faster… [increases] crawl rate. For Googlebot, a speedy site is a sign of healthy servers, so it can get more content over the same number of connections.”
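As a very rough first check on server speed, a developer could time how long a page’s HTML takes to download. The sketch below is only an approximation under stated assumptions: it measures the raw HTML fetch, not the full load a browser performs, and the `time_fetch` and `is_slow` helpers are hypothetical. The 3-second threshold simply reuses the figure mentioned above.

```python
import time
from urllib.request import urlopen

SLOW_THRESHOLD_SECONDS = 3.0  # the 3-second mark mentioned above


def fetch_body(url):
    """Download the raw response body for url."""
    with urlopen(url, timeout=10) as response:
        return response.read()


def time_fetch(url, fetch=fetch_body):
    """Return (elapsed_seconds, body_size_in_bytes) for one fetch of url.

    fetch is injectable so the timing logic can be tested offline.
    """
    start = time.perf_counter()
    body = fetch(url)
    elapsed = time.perf_counter() - start
    return elapsed, len(body)


def is_slow(elapsed_seconds):
    """Return True if a measured time exceeds the threshold."""
    return elapsed_seconds > SLOW_THRESHOLD_SECONDS


if __name__ == "__main__":
    # Requires network access; example.com is a placeholder URL.
    elapsed, size = time_fetch("https://example.com/")
    print(f"{size} bytes in {elapsed:.2f}s, slow={is_slow(elapsed)}")
```

Browser-based tools give a fuller picture, since they also account for images, scripts, and rendering.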

Responsive web design

Responsive web design benefits your SEO and is even recommended by Google for better rankings. According to Google, responsive websites show better performance in SERP since they offer an improved user experience. Because a responsive site needs no redirect to a device-specific version, it can also load faster, and users can share and link to your content with just a single URL.

SEO experts prefer a responsive version since these websites have one URL and similar HTML, and Google is able to crawl, index, and organize content from just a single URL more quickly and efficiently.

HTML Metadata

There is so much that goes into building a website that metadata is often overlooked or underserved. The multiple HTML elements of a website need to have SEO-optimized information, which can make the process a hassle. But with preparation, it’s easy to enter the information during development, as opposed to after.
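One way to keep metadata from being overlooked is to check for it automatically during development. The following sketch scans an HTML string for a title plus ‘description’ and ‘viewport’ meta tags and reports what is missing. The `HeadAudit` class, the `missing_metadata` helper, and the choice of required tags are assumptions for this example, not an official checklist.

```python
from html.parser import HTMLParser


class HeadAudit(HTMLParser):
    """Records the <title> text and named <meta> tags of a page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.meta = {}
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and attr.get("name"):
            self.meta[attr["name"].lower()] = attr.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def missing_metadata(html, required=("description", "viewport")):
    """Return the names of metadata elements that are absent or empty."""
    audit = HeadAudit()
    audit.feed(html)
    audit.close()
    missing = []
    if not audit.title.strip():
        missing.append("title")
    for name in required:
        if not audit.meta.get(name, "").strip():
            missing.append(name)
    return missing


# Made-up page that forgets the viewport tag.
PAGE_HTML = """<html><head>
<title>Pricing</title>
<meta name="description" content="Plans and pricing for our product.">
</head><body></body></html>"""

print(missing_metadata(PAGE_HTML))
```

Running a check like this in a build step or test suite makes it easier to enter the information during development rather than after launch.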

Why Does it Matter for SEO?

How fast your site loads, what it looks and feels like on mobile devices, and the overall user experience are three key areas that can make or break your site in search engine visibility. It’s reasonable to assume that slow, unresponsive, and non-inclusive websites will rank lower in search engines, especially considering that in March 2020 Google announced they would be switching to ‘Mobile First Indexing’ in September of 2020.

What Role do Web Developers Play?

Web Developers have the tools to measure and influence loading speeds and responsiveness, more than anyone else involved in the website development and production pipeline. They also work directly with the HTML that brings a site together. Working together with all teams, they can reduce the size of media files, create mobile-optimized content, and fill out the necessary metadata properly.

Why do Web Developers Need to Know About Responsive Web Design Specifically?

More than half of all web traffic in 2021 came from mobile devices, and Google now uses mobile-first indexing. Developers need to build sites with mobile as the first priority and desktop second. It’s much easier to expand content and change how it’s organized as screen space grows than to contract the same information and lose details and functionality as screen space shrinks.

The Bottom Line

It’s no longer only the SEO team’s job to make sure websites rank in the top 10. It’s a group effort from all team members, but especially Web Developers. Getting SEO right the first time saves time, energy, and money. And since responsiveness is the future bedrock of how Google will rank web pages, getting ahead of the pack as a Web Developer is the best SEO strategy.
